(This is part 2 of a series on an AI-generated TV show. Click here to check out part 1. Part 3 is also available!)

So, what do I mean when I say “the AI writes the story”?

My narrative generation strategy follows these steps:

- Generate an episode concept with an A plot and a B plot.

- Break them down into scenes and merge them into one coherent narrative.

- Write more detailed summaries of each scene, and revise the scenes again.

- Write and edit the shooting script for each scene.
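As a rough sketch of how these four steps chain together, the code below treats each step as one or more model calls. It is not the author's orchestration code. `askModel` is a hypothetical LLM client (synchronous here for brevity), and the prompt wording and the one-scene-per-line convention are assumptions.

```typescript
// Hypothetical LLM client: sends one prompt and returns the model's reply.
// (Synchronous to keep the sketch short; a real client would be async.)
type AskModel = (prompt: string) => string;

interface Episode {
  concept: string;
  scenes: string[];
  scripts: string[];
}

// Assumed convention: scene lists come back as one scene per line.
function toLines(text: string): string[] {
  return text
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

function generateEpisode(askModel: AskModel, theme: string): Episode {
  // 1. Episode concept with an A plot and a B plot.
  const concept = askModel(
    `Write an episode concept about "${theme}" with an A plot and a B plot.`
  );

  // 2. Break both plots into scenes and interleave them into one narrative.
  const outline = toLines(
    askModel(`Break this concept into scenes, one per line, merging the A and B plots:\n${concept}`)
  );

  // 3. Expand every scene into a detailed summary, then revise the full list.
  const detailed = outline.map((scene) =>
    askModel(`Write a detailed one-paragraph summary of this scene:\n${scene}`)
  );
  const revised = toLines(
    askModel(`Revise these scenes for pacing and consistency, one per line:\n${detailed.join("\n")}`)
  );

  // 4. Draft a shooting script for each scene, then run an editing pass over it.
  const scripts = revised.map((scene) => {
    const draft = askModel(`Write a shooting script for this scene:\n${scene}`);
    return askModel(`Edit this shooting script:\n${draft}`);
  });

  return { concept, scenes: revised, scripts };
}
```

The real system also critiques and rewrites at several of these stages, which this sketch leaves out.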

Let’s dive in!

Building Foundations

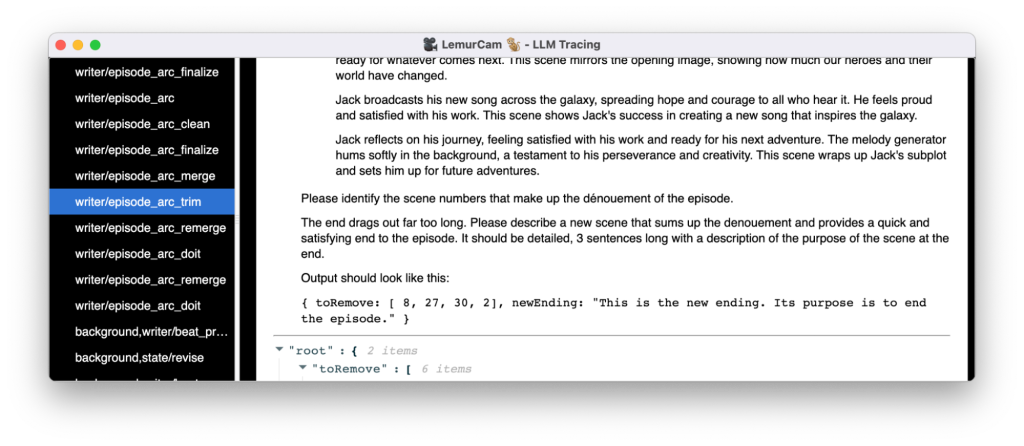

I wrote a library for managing LLM requests, and a tool (shown above) to view the requests in real time. The library stores requests and results so they can be reused, and it lets the LLM trigger further processing where appropriate. On top of these systems, I wrote a program to orchestrate generation of the narrative.

The above is a screenshot of the monitoring tool. The left-hand side lists every request made to the LLM, showing the prompts used in each. The right-hand side shows the selected request’s details: the messages submitted, the prompts used in each, the LLM’s response, and so on. Any Markdown or JSON is formatted appropriately.
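Storing requests and results so they can be reused is, in effect, memoization keyed on the full request. A minimal sketch under assumptions: requests are plain JSON-serializable objects, an in-memory object stands in for whatever storage the real library uses, and `CachedLlmClient`, `LlmRequest` and `RequestRecord` are hypothetical names. The `log` array is the kind of record a monitoring view like the one above could list.

```typescript
// What gets sent to the model: the prompt names used and the rendered messages.
interface LlmRequest {
  prompts: string[];
  messages: string[];
}

// One row in a monitor's request list.
interface RequestRecord {
  request: LlmRequest;
  response: string;
  cached: boolean; // true when a stored result was reused
}

class CachedLlmClient {
  private readonly store: Record<string, string> = {};
  readonly log: RequestRecord[] = [];
  private readonly callModel: (request: LlmRequest) => string;

  constructor(callModel: (request: LlmRequest) => string) {
    this.callModel = callModel;
  }

  send(request: LlmRequest): string {
    // Identical requests serialize to the same key (field order included),
    // so a repeated request reuses the stored result instead of calling the model.
    const key = JSON.stringify(request);
    const stored = this.store[key];
    const response = stored !== undefined ? stored : this.callModel(request);
    if (stored === undefined) this.store[key] = response;
    this.log.push({ request, response, cached: stored !== undefined });
    return response;
  }
}
```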

For the more technically minded, here are some examples of what calling the AI looks like:

```typescript
// Combine multiple prompts and insert related data where marked.
await dmSession.chat(["background", "dm/start"], {
  background: await factDatabase.getSeedText(),
  prompt,
});

// Process a prompt, but only use the results later.
// In this case, the LLM uses "dm/critique" to critique its story idea.
await dmSession.chat(["dm/critique"], {});

// Based on the critique, the LLM refines the story idea.
const beatSheet = await dmSession.chat(["dm/refine"], {});
```
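The critique step only pays off because the session keeps its history: the critique reply is never read by the orchestration code, but it stays in the conversation that `dm/refine` sees. A minimal sketch of that idea, not the author's library: `ChatSession`, the in-memory template table and the synchronous `ChatModel` callback are assumptions.

```typescript
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Hypothetical model callback: sees the whole conversation, returns the next reply.
type ChatModel = (messages: ChatMessage[]) => string;

class ChatSession {
  private readonly messages: ChatMessage[] = [];
  private readonly templates: Record<string, string>;
  private readonly model: ChatModel;

  constructor(templates: Record<string, string>, model: ChatModel) {
    this.templates = templates;
    this.model = model;
  }

  // Joins the named prompt templates, fills {{{name}}} slots from `data`, and sends
  // the result together with every earlier turn, so a later prompt such as
  // "dm/refine" can build on an earlier reply such as the "dm/critique" output.
  chat(promptNames: string[], data: Record<string, string>): string {
    const text = promptNames
      .map((promptName) => {
        const template = this.templates[promptName];
        if (template === undefined) throw new Error(`Unknown prompt: ${promptName}`);
        return template;
      })
      .join("\n\n")
      // Note: a missing value silently becomes an empty string here.
      .replace(/\{\{\{(\w+)\}\}\}/g, (_match: string, key: string) => data[key] ?? "");
    this.messages.push({ role: "user", content: text });
    const reply = this.model(this.messages.slice());
    this.messages.push({ role: "assistant", content: reply });
    return reply;
  }
}
```

With templates named `background`, `dm/start`, `dm/critique` and `dm/refine`, the three calls above map onto three `chat` calls on one session, and the third reply is produced with both earlier exchanges in context.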

My prompts are Markdown files, populated with information from the rest of the system:

```markdown
Here is the background for a TV show: {{{background}}}

Please come up with a beat sheet for a sci-fi tv show;
focus on the theme/topic of "{{{prompt}}}".
```

The most common issue by far was small mistakes that kept important data out of the prompt. The prompt above works very poorly if {{{background}}} is blank! The monitoring tool is very useful for catching this, but even so, these issues took a lot of time to resolve.
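One way to make this kind of mistake fail loudly, rather than silently producing a weak prompt, is to refuse to render a template with a missing or blank value. A minimal sketch, assuming the `{{{name}}}` placeholders shown above are the only template syntax in use; `renderPrompt` is a hypothetical helper, not the author's templating code.

```typescript
// Matches triple-brace placeholders such as {{{background}}}.
const PLACEHOLDER = /\{\{\{(\w+)\}\}\}/g;

function renderPrompt(template: string, data: Record<string, string | undefined>): string {
  const missing: string[] = [];
  const rendered = template.replace(PLACEHOLDER, (_match: string, key: string) => {
    const value = data[key];
    // Treat whitespace-only values as missing: a blank background is as bad as none.
    if (value === undefined || value.trim() === "") {
      if (missing.indexOf(key) === -1) missing.push(key);
      return "";
    }
    return value;
  });
  if (missing.length > 0) {
    throw new Error(`Prompt has empty placeholders: ${missing.join(", ")}`);
  }
  return rendered;
}
```

Throwing here trades a stopped run for a wasted, misleading generation, and the error message names exactly which slot was empty.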

Episode generation is autonomous, but the show bible is human-made, and so are the prompts and code that control the LLM. Humans closely review each episode’s output. Models change often, and each new episode tends to reveal bugs or weaknesses in the system, so humans also tweak the prompts. This becomes less and less necessary as more episodes are produced.

Consistency & Memory

Many iterations of On Screen were heavily self-contradictory. This was perhaps the biggest problem to overcome.

To demonstrate the problem, let’s consider a story about a space egg. In the first scene, character A has the egg. In the next scene, character B has the egg. The scene after that, A has it again. Finally, A and B discuss their plan to find the egg. This is technically a story, but not a very entertaining one.

I tried a lot of different approaches to fix this. I took a shot at a Smallville-style system by writing a text adventure engine and having the AI give commands like “go north”, “look at Steve”, “say hi”, and so on. I tried having the AI maintain a schedule of upcoming events, revise it if something unexpected happened, and write scenes based on each day’s events. I also built a simple vector database and an entity memory.
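To make the space-egg problem concrete, here is a toy entity memory that records who holds each object and reports scenes that contradict earlier ones. It is an illustrative sketch only: the article does not describe how its entity memory was structured, and the `SceneFact` format (which an LLM would have to extract from each scene) is an assumption.

```typescript
// Facts a scene asserts about an object (hypothetical format).
type SceneFact =
  | { kind: "holds"; item: string; holder: string }
  | { kind: "gives"; item: string; from: string; to: string }
  | { kind: "seeks"; item: string; seekers: string[] };

class EntityMemory {
  private readonly holders: Record<string, string> = {};

  // Checks a scene's facts against what earlier scenes established, then records them.
  // Returns a description of every contradiction found.
  applyScene(scene: number, facts: SceneFact[]): string[] {
    const problems: string[] = [];
    for (const fact of facts) {
      const current = this.holders[fact.item];
      switch (fact.kind) {
        case "holds":
          // Possession may only change through an explicit hand-off.
          if (current !== undefined && current !== fact.holder) {
            problems.push(`Scene ${scene}: ${fact.holder} has the ${fact.item}, but ${current} never handed it over`);
          }
          this.holders[fact.item] = fact.holder;
          break;
        case "gives":
          if (current !== undefined && current !== fact.from) {
            problems.push(`Scene ${scene}: ${fact.from} gives away the ${fact.item}, but ${current} has it`);
          }
          this.holders[fact.item] = fact.to;
          break;
        case "seeks":
          // Searching for something you are known to be holding is a contradiction.
          if (current !== undefined && fact.seekers.indexOf(current) !== -1) {
            problems.push(`Scene ${scene}: ${current} is searching for the ${fact.item} they already have`);
          }
          break;
      }
    }
    return problems;
  }
}
```

Replaying the space-egg story through this memory flags scenes 2, 3 and 4; adding a `gives` fact for each hand-off would make scenes 2 and 3 legitimate.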

Early on, these structured approaches outperformed the more general “keep some notes as you write” approach. Older-generation LLMs tended to lose or hallucinate information, so the notes degraded as the model revised them. Enforcing a structure helped avoid this, although in some cases at a very high cost in time and money.

However, GPT-4 had a larger context window, plus better recall and reasoning. It was powerful enough to maintain general notes without loss or embellishment, so I got good results without imposing additional structure.

Rewrites

To quote Neil Gaiman: “Once you’ve got to the end, and you know what happens, it’s your job to make it look like you knew exactly what you were doing all along.”

There are many ways to write stories, but for On Screen I focused on self-consistency and story arcs: self-consistency because stories that don’t make sense are boring and confusing, and story arcs so that each episode has a clear beginning, middle, and end for the narrative to move through.

Initial drafts from the AI (or even from people!) resemble a lumpy rug – they tend to be uneven in time, space, and interest. Some scenes are too slow, others cover too much, some are repetitive or just useless. There are inconsistencies, dropped threads, and contradictions.

We want our “rug” to lie flat: good pacing, consistency, sustained interest, and follow-through. I use a few techniques to push the lumps around and ultimately flatten the rug:

Add/remove/reorder. At several points, the story is reviewed using a ReAct-style approach. The AI generates a critique, then a list of instructions on what to add, remove, or modify. Finally, it uses the instructions to generate a new outline. This is like cutting up the rug and stitching it back together to remove lumps.
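The critique, then instructions, then new outline loop is easier to check when the instructions come back in a structured form. A sketch under that assumption: the `Edit` operations and their shape are hypothetical, and here plain code applies them to the outline, whereas in the article the model itself generates the new outline from its instructions.

```typescript
// Hypothetical structured instructions, e.g. parsed from JSON the model returns
// after writing its critique. Positions are 1-based and refer to the outline as it
// stands when that instruction is applied (instructions run in order).
type Edit =
  | { op: "add"; after: number; scene: string } // after = 0 inserts at the start
  | { op: "remove"; index: number }
  | { op: "modify"; index: number; scene: string }
  | { op: "move"; from: number; to: number };

function checkPosition(position: number, min: number, max: number): void {
  if (Math.floor(position) !== position || position < min || position > max) {
    throw new Error(`Invalid scene position ${position} (expected ${min}-${max})`);
  }
}

function applyEdits(outline: string[], edits: Edit[]): string[] {
  const result = outline.slice(); // never mutate the caller's outline
  for (const edit of edits) {
    switch (edit.op) {
      case "add":
        checkPosition(edit.after, 0, result.length);
        result.splice(edit.after, 0, edit.scene);
        break;
      case "remove":
        checkPosition(edit.index, 1, result.length);
        result.splice(edit.index - 1, 1);
        break;
      case "modify":
        checkPosition(edit.index, 1, result.length);
        result[edit.index - 1] = edit.scene;
        break;
      case "move": {
        // `to` is the scene's final 1-based position after it is moved.
        checkPosition(edit.from, 1, result.length);
        checkPosition(edit.to, 1, result.length);
        const moved = result.splice(edit.from - 1, 1);
        result.splice(edit.to - 1, 0, ...moved);
        break;
      }
    }
  }
  return result;
}
```

Validating positions before applying anything means a hallucinated scene number surfaces as an error rather than a quietly mangled outline.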

Rolling rewrites. If the AI revises a long list of scenes in one pass, there is a risk it will totally restructure the story or drop important points. It’s better to break the list into overlapping sections and revise each section in turn: for instance, scenes 1-8, then 4-12, then 8-16, and so on. This gives the model clear start and end points within which to make changes. Because each scene is treated as fixed at one point and as modifiable at another, the story as a whole stays malleable without major pieces getting destroyed.

(This was a great suggestion from my friend Steve Newcomb.)
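The window arithmetic for rolling rewrites can be sketched as follows. One way to reproduce the 1-8, 4-12, 8-16 pattern is to end each window `stride` scenes after the previous one and reach back `span` scenes; `span = 8` and `stride = 4` are assumptions chosen to match that example. `revise` stands in for the LLM call and is assumed to return the same number of scenes it receives; a version that allowed merges would have to recompute the windows after each pass.

```typescript
// Computes overlapping 1-based [start, end] windows: each window ends `stride`
// scenes after the previous one and reaches back `span` scenes, which yields
// 1-8, 4-12, 8-16, ... for span = 8 and stride = 4.
function rollingWindows(sceneCount: number, span = 8, stride = 4): Array<[number, number]> {
  if (span < 1 || stride < 1) throw new Error("span and stride must be positive");
  const windows: Array<[number, number]> = [];
  let lastScene = 0;
  for (let end = span; lastScene < sceneCount; end += stride) {
    lastScene = Math.min(end, sceneCount);
    windows.push([Math.max(1, end - span), lastScene]);
  }
  return windows;
}

// Revises each window in turn, so later windows see the already-revised scenes.
// Assumes `revise` (a stand-in for the LLM call) returns as many scenes as it receives.
function rollingRewrite(
  scenes: string[],
  revise: (window: string[]) => string[],
  span = 8,
  stride = 4
): string[] {
  let result = scenes.slice();
  for (const [start, end] of rollingWindows(scenes.length, span, stride)) {
    const revised = revise(result.slice(start - 1, end));
    if (revised.length !== end - start + 1) {
      throw new Error(`Window ${start}-${end} changed the scene count`);
    }
    result = result.slice(0, start - 1).concat(revised, result.slice(end));
  }
  return result;
}
```

Scenes in the overlaps are revised more than once (scene 8 appears in all three windows for a 16-scene outline), which is what lets each section adapt to its already-revised neighbors.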

Second draft. The AI takes discrete units of the story – like a fully written scene – and changes them in specific, limited ways. For instance, it could rewrite a scene so that character A talks with one accent, and character B talks with another accent. Or it could rewrite a scene to remove redundant/repetitive language.
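A second-draft pass can be modeled as a list of narrow instructions applied to a finished scene one at a time, each in its own model call. A minimal sketch; the prompt wording, the `secondDraft` helper and the synchronous `askModel` callback are all hypothetical.

```typescript
// Hypothetical LLM call (synchronous for brevity).
type AskModel = (prompt: string) => string;

// Applies narrow rewrite instructions one at a time. Each prompt names exactly one
// change and asks the model to leave everything else alone.
function secondDraft(scene: string, instructions: string[], askModel: AskModel): string {
  return instructions.reduce(
    (draft, instruction) =>
      askModel(
        `Rewrite the scene below, making only this change: ${instruction}\n` +
          `Keep every event, character, and line of dialogue otherwise unchanged.\n\n${draft}`
      ),
    scene
  );
}
```

Keeping one change per call makes a bad pass easier to spot in the request log and to drop without losing the others.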

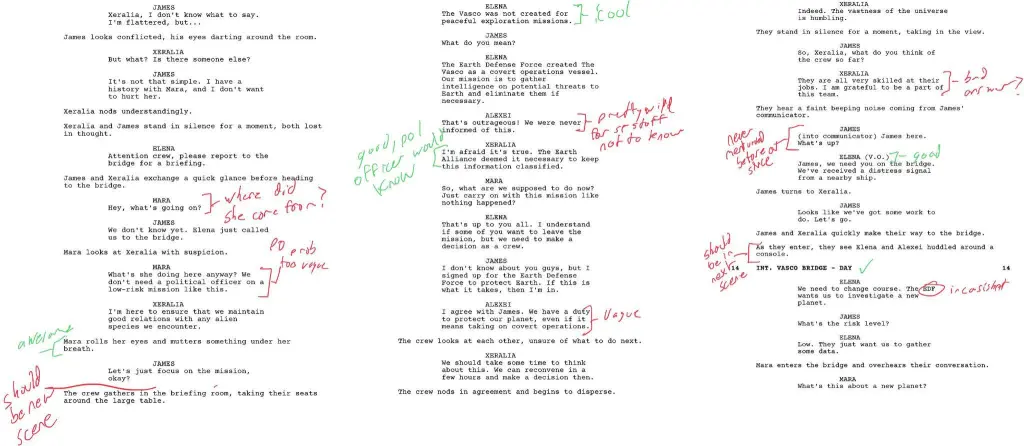

Compact and trim. The AI often struggles with endings. An ending has many threads to integrate, which is a weak point for the model. It also likes to circle around the same concluding moments, generating many overly similar ending scenes. To avoid this, the LLM reviews the last 15% or so of the story outline and merges the concluding scenes (of which there could be 5 or 6!) down to just one or two.
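The compact-and-trim step boils down to splitting off the tail of the outline and replacing it with a merged version. A sketch, assuming a caller-supplied `merge` callback stands in for the LLM; the 15% default comes from the text, while the one-to-two-scene check is an added safeguard, not something the article describes.

```typescript
// Replaces the closing part of an outline (about 15% by default) with the
// output of `merge`, a stand-in for an LLM call that folds repetitive
// concluding scenes together. The scene limit is a sanity check added here.
function compactEnding(
  scenes: string[],
  merge: (ending: string[]) => string[],
  tailFraction = 0.15,
  maxEndingScenes = 2
): string[] {
  if (scenes.length === 0) return [];
  const tailLength = Math.max(1, Math.round(scenes.length * tailFraction));
  const cut = scenes.length - tailLength;
  const merged = merge(scenes.slice(cut));
  if (merged.length < 1 || merged.length > maxEndingScenes) {
    throw new Error(`Expected 1-${maxEndingScenes} closing scenes, got ${merged.length}`);
  }
  return scenes.slice(0, cut).concat(merged);
}
```

For a 40-scene outline, the default fraction hands the last six scenes to `merge`, in line with the “5 or 6” concluding scenes mentioned above.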

Rewrites like these aren’t perfect. Sometimes the LLM goes too far in simplifying/merging scenes and generates a very short episode. And it still sometimes generates repetitive or simply boring scenes. But on balance, rewrites have a very positive impact on quality.
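One possible guard against over-eager merging, not described in the article, is to reject a rewrite that shrinks the outline too much and fall back to the previous version. The `guardedRewrite` helper, the 70% threshold and the three attempts below are arbitrary, hypothetical choices.

```typescript
// Runs a rewrite step (a stand-in for an LLM call), retrying when the result
// keeps fewer than `minRatio` of the original scenes. If every attempt
// over-trims, the original outline is returned unchanged.
function guardedRewrite(
  scenes: string[],
  rewrite: (scenes: string[]) => string[],
  minRatio = 0.7,
  attempts = 3
): string[] {
  const minimumScenes = Math.ceil(scenes.length * minRatio);
  for (let i = 0; i < attempts; i++) {
    const candidate = rewrite(scenes);
    if (candidate.length >= minimumScenes) return candidate;
  }
  return scenes;
}
```

This only catches the “very short episode” failure; repetitive or boring scenes still need a critique pass to detect.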

Coming Up Next

After building some foundational tools, I had to get the AI to keep a consistent view of the story world. Then I had to use different strategies to make it write and rewrite the script until the show was ready to “film.”

Next, I’ll talk about the visualizer – how I actually convert a script into a watchable episode.
