(This is part 1 of a series. Part 2 is available now!)

I made an autonomous space opera generator called On Screen!. Give it a topic, and it generates a 10-15 minute episode ready for upload to YouTube. Let me tell you about it and the journey behind it.

January 2023: LLMs start to deliver on natural language AI. Influencers predict the end of knowledge work. Researchers publish hundreds of papers weekly, soon increasing to thousands. The pace has only increased since then.

With all this activity, AI was making me nervous. How would it impact my livelihood? I found myself jumping at shadows. It was clear the energy I spent worrying would be better spent building something concrete.

Lots of people are doing knowledge retrieval, code generation, text narratives, and the like. I decided on creating an AI TV show to stand out, and because of a few other reasons:

- Many sins are easily overlooked in enterprise AI, but become big problems in entertainment – repetition, inconsistency, and lack of followthrough first among them. I would have to understand and address problems around understanding, retrieval, memory, prompt design, and so on.

- Video content requires many disciplines and forms of content – scripts, shooting instructions, actors and sets, voice over – so I would need a broad understanding of AI capabilities.

- I could leverage existing game dev experience to build the 3d/video generation side.

- People like pictures and video more than text!

- Shooting for ambitious goals would drive me to learn more, even if the final product fell short of perfection.

So, I decided On Screen! would be the thing to build.

Don’t worry if you’re not familiar with AI. I’m going to show you how I made it generate a TV show, the lessons I learned, and the experiences I had with it, not go into heavy technical detail.

I’m using loose definitions to stay accessible to the layman, but for experts, please replace “AI” with “mixed use of deep learning and traditional techniques”, and “words” with “tokens”.

PS – If you are finding this first part slow going, don’t be shy about skipping ahead to part 2.

An Imagination Machine

Movies are often predictable. Yet you can have two versions of the same story, and one is good, and the other is terrible. Is good storytelling formulaic or inspired?

LLMs (Large Language Models, like ChatGPT) are good at formulaic output, because they mimic what they have seen. They aren’t good at inspired, creative output, because it is new territory. They tend towards what they were trained on, so subtly that it might not even be noticeable. Careless use of AI creates shoddy stories that fail to draw people in.

Compare the above big budget movies with the “mockbusters” that compete with them. They start with the same premise, but one is objectively much better. Tight characterization, pay off, good dialog, strong music, exciting pacing, effective branding, and distribution/marketing are the factors that take you from Leo the Lion to Lion King.

Good storytelling requires getting the details right. How do you use AI to make blockbusters, not mockbusters?

First, you need some basic ingredients found in any AI project. You have to make smart choices with libraries and architecture. Evaluate models for flexibility and stability. Manage cost vs quality vs speed tradeoffs. Coordinate many prompts with complex data formats. Implement support systems like caching and debugging.

It needs to track plot threads and follow up on important events. But maintaining basic consistency and tracking plot threads for a 15 minute episode is HARD!

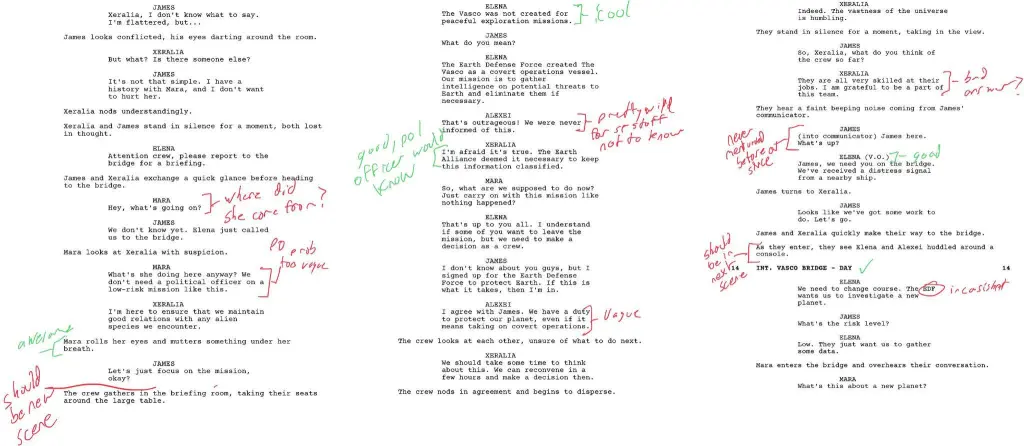

Below, you’ll see annotated scripts marked with failures (red) and victories (in green). In the course of development, it generated hundreds of pages of failed attempts. Frequently, the system made duplicated scenes, or scenes unrelated to the plot, or repetitive elements. One iteration was into having the characters cook. Like, REALLY into it.

Understanding the interplay of recall, prompt design, data flow, rewrites, and so on was an incremental process. The best approaches shifted as new models and prompting techniques came out. But step by step, it came together.

Establishing Context

Context size (as of early ‘24) is the biggest single factor impacting the architecture of LLM-based systems. Even with million token models coming out, cost, speed, and the ability of the AI to focus on complex tasks with many instructions mean that context size remains important.

Context size is the combined count of how many words you can give it PLUS how many you can get back from it. Often, once the LLM gets the series bible (the detailed background for the fictional world) and a detailed plot outline, there’s not much room to write anything.

Even with newer long context models, the AI struggles with complex instructions – so context remains an important concept.

Allow me to illustrate:

The gray box represents the context window (~2000 words in this example). We submit our series bible (blue) and plot outline (green). Suddenly we are down to ~300 words for output (orange). We want the rest of our story, but there’s not enough room for the model to write it!

Managing context length boils down to taking lots of words and turning them into fewer words by trimming and/or summarizing. Then there’s room to process more information. All chatbots do this behind the scenes. Below is an illustration of how summarization affects context:

To gain space, you have to sacrifice input by simplifying it. But dropping details gradually obliterates knowledge about the story and universe. “Indiana Jones has an encounter on a freighter with a man in a Panama hat who attempts to steal a golden crucifix belonging to Coronado.” is going to impact the story very differently than “Indy fights a man on a boat.”.

Should they find the journal, then the Grail, or the Grail, then the journal? Or the Grail, then the key to the truck? Or did the key get summarized out of existence entirely?

Consider idealized approach to writing a story. First, we write a plot synopsis. Second, we break it down into chapters. Third, we write scenes in each chapter. Finally, there are second drafts, outline rearranging, and so on. The below illustration visualizes the process:

There is a pernicious problem here. To write scene 8, we need information about the preceding 7 scenes, the show bible, the plot summary, ongoing story threads, and so on. And the model still needs room to write the story!

All this makes it very difficult to track whether plot threads have been resolved or not, to make detailed references to past events, and to resolve parts of the story. Some threads must remain unresolved, too, to keep the story going long term. Voyager would be a lot less interesting if they got back to Earth at the end of the first episode.

Coming Up Next

Out of a desire to understand AI, I made an AI tool for generating an episodic space opera show. Story telling is an especially demanding application of AI, and it requires dealing with many complex tasks simultaneously.

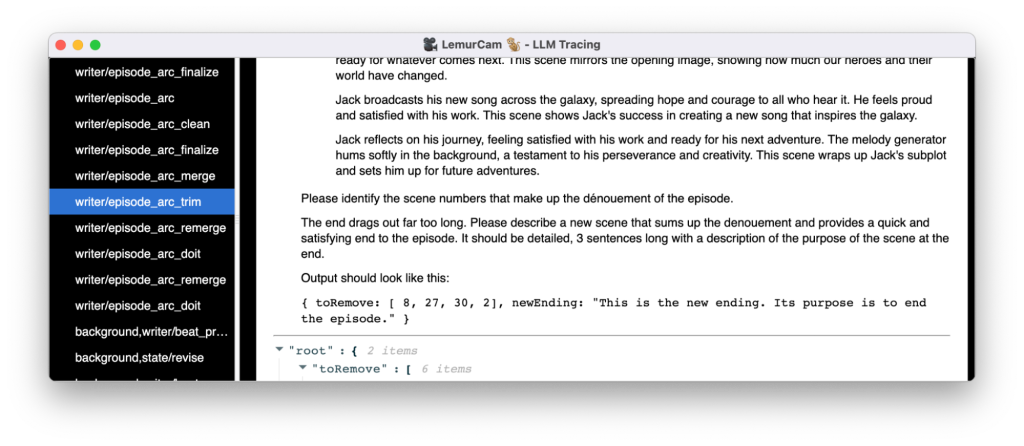

Next, I’ll talk about different prompting and memory strategies I used, what worked and what didn’t, as well as some of the tools I built.

2 responses to “AI Narratives: On Screen! (Part 1)”

[…] (Click here to go back to part 1) […]

[…] (This is part 2 of a series on an AI generated TV show. Click here to check out part 1.) […]